The terrific Garbage Collector in the .NET runtime is a compacting one. This means that during garbage collection it moves the memory blocks still in use closer to each other, which merges the free space between them into larger contiguous blocks and lessens the chance of running out of memory due to heap fragmentation.

This is pretty important for memory-intensive applications, as it makes it much more likely that free contiguous memory will be available whenever a large object needs to be allocated.

Whenever you perform send or receive socket operations in .NET, the buffer that holds the transmitted or received data is pinned by the .NET runtime. Pinning means that the region of memory that holds the data is locked down and cannot be moved around by the Garbage Collector. This is necessary so that the Windows Sockets API (Winsock), which handles the actual socket operation and lives outside managed code, can access the buffer's memory locations reliably. This pinning takes place regardless of whether the operation is synchronous or asynchronous.
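To make pinning concrete, here is a minimal sketch that pins a byte array by hand with GCHandle, which has an effect similar to what happens to a socket buffer while native code is using it. The PinningDemo class and its PinAndInspect method are hypothetical names for illustration; GCHandle.Alloc, AddrOfPinnedObject and Free are standard .NET APIs.

```csharp
using System;
using System.Runtime.InteropServices;

// Hypothetical demo class: pins a managed array so its address cannot change.
public static class PinningDemo
{
    // Pins 'buffer', reads the address native code would use, then unpins it.
    // Returns true if a valid (non-zero) address was obtained while pinned.
    public static bool PinAndInspect(byte[] buffer)
    {
        GCHandle handle = GCHandle.Alloc(buffer, GCHandleType.Pinned);
        try
        {
            // While the handle is allocated, the GC will not move the array,
            // so this address stays valid for unmanaged code.
            IntPtr address = handle.AddrOfPinnedObject();
            return address != IntPtr.Zero;
        }
        finally
        {
            // Releasing the pin lets the GC move the array again.
            handle.Free();
        }
    }
}
```

Every pin that is still alive during a collection is an object the GC has to compact around, which is why thousands of concurrent socket operations add up.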

If you are building a memory-intensive server application that is expected to serve thousands of clients concurrently, then you have a simmering problem that will blow up as soon as the server starts serving a non-trivial number of clients.

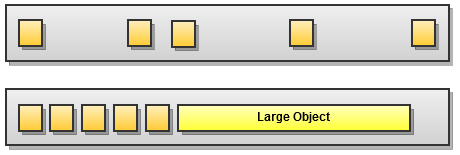

Thousands of sockets performing receive and/or send operations will cause thousands of memory locations to be pinned. If your server stores a lot of data in memory, you can have a fragmented heap that even though collectively has enough free memory to store a large object, is unable to do so, because there isn’t enough memory in a contiguous free block to store it. This is illustrated below:

The diagram above depicts the common scenario where a large object is about to be allocated in memory. There is insufficient contiguous memory to store the object, which triggers garbage collection. During garbage collection, the memory blocks in use are compacted, freeing enough room to store the large object.

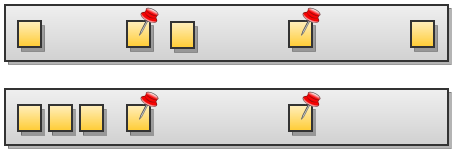

The diagram above depicts the same scenario with some objects pinned. The garbage collector compacts the objects that are not pinned, which makes some room, but not enough, because the pinned objects cannot be moved. The end result is that the runtime throws a nasty OutOfMemoryException, because there is no contiguous free block large enough for the large object to be allocated.
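The two diagrams can be reproduced with a toy model. In the sketch below, which is a deliberate simplification and not how the CLR collector works internally, the heap is an array of slots that are free, used or pinned. Compaction slides used slots down within each stretch between pinned slots, and the longest free run is the largest object that still fits. The Slot and ToyHeap names are hypothetical.

```csharp
using System;

// Toy heap model (hypothetical, illustration only): each slot is one unit
// of memory that is Free, Used (movable) or Pinned (immovable).
public enum Slot { Free, Used, Pinned }

public static class ToyHeap
{
    // Packs movable slots toward the start of each run between pinned slots.
    // Pinned slots never move, so free space cannot merge across them.
    // Simplification: movable objects never slide past a pinned slot.
    public static Slot[] Compact(Slot[] heap)
    {
        Slot[] result = new Slot[heap.Length]; // every slot starts as Free
        int regionStart = 0;
        for (int i = 0; i <= heap.Length; i++)
        {
            if (i == heap.Length || heap[i] == Slot.Pinned)
            {
                // Count the movable slots in [regionStart, i) and pack them left.
                int used = 0;
                for (int j = regionStart; j < i; j++)
                    if (heap[j] == Slot.Used) used++;
                for (int j = regionStart; j < regionStart + used; j++)
                    result[j] = Slot.Used;
                if (i < heap.Length) result[i] = Slot.Pinned;
                regionStart = i + 1;
            }
        }
        return result;
    }

    // Length of the longest run of Free slots: the largest object that fits.
    public static int LargestFreeRun(Slot[] heap)
    {
        int best = 0, current = 0;
        foreach (Slot s in heap)
        {
            current = s == Slot.Free ? current + 1 : 0;
            best = Math.Max(best, current);
        }
        return best;
    }
}
```

For example, in an eight-slot heap where four free slots alternate with four used ones, compaction produces a four-slot free block when nothing is pinned. If the first and fifth slots are pinned instead, the largest free block after compaction is only two slots, even though four slots are still free.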

This problem is discussed at length in this blog post and in this blog post. The solution to the problem, as noted in those posts, is to pre-allocate buffers. This basically means setting up a byte array which your sockets will use to buffer data. If all your socket operations use buffers from the designated byte array, then only memory locations within the region where the byte array is stored will be pinned, giving the GC greater freedom to compact memory.

However, for this to work effectively with multiple asynchronous socket operations, there has to be some kind of buffer manager that will dish out different segments of the array to different operations and ensure that buffer segments allotted for one operation are not used by a different operation until they are marked as no longer in use.
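A buffer manager of this kind can be sketched in a few lines. The SegmentAllocator class below is a hypothetical illustration, not the BufferPool implementation: it pre-allocates one slab, hands out fixed-size ArraySegment&lt;byte&gt; views into it under a lock, and only reissues a segment after it has been returned. Because every segment refers to the same array, only that one array is ever pinned.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical fixed-size segment allocator over one pre-allocated slab.
public sealed class SegmentAllocator
{
    private readonly byte[] _slab;                 // the single pre-allocated array
    private readonly int _segmentSize;
    private readonly Stack<int> _freeOffsets = new Stack<int>();
    private readonly object _sync = new object();

    public SegmentAllocator(int segmentSize, int segmentCount)
    {
        _segmentSize = segmentSize;
        _slab = new byte[segmentSize * segmentCount];
        // Push offsets in reverse so the first rented segment starts at offset 0.
        for (int i = segmentCount - 1; i >= 0; i--)
            _freeOffsets.Push(i * segmentSize);
    }

    // Hands out a segment that no other caller holds; false if all are in use.
    public bool TryRent(out ArraySegment<byte> segment)
    {
        lock (_sync)
        {
            if (_freeOffsets.Count == 0)
            {
                segment = default(ArraySegment<byte>);
                return false;
            }
            segment = new ArraySegment<byte>(_slab, _freeOffsets.Pop(), _segmentSize);
            return true;
        }
    }

    // Marks a segment as no longer in use so it can be handed out again.
    public void Return(ArraySegment<byte> segment)
    {
        if (segment.Array != _slab)
            throw new ArgumentException("Segment does not belong to this slab.");
        lock (_sync)
        {
            _freeOffsets.Push(segment.Offset);
        }
    }

    public int FreeCount
    {
        get { lock (_sync) { return _freeOffsets.Count; } }
    }
}
```

A caller would pass the rented segment to a socket operation (Socket.Send and Socket.Receive have overloads that take an IList&lt;ArraySegment&lt;byte&gt;&gt;) and call Return once the operation completes. For brevity, this sketch does not detect a segment being returned twice.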

I searched for existing solutions to this problem but couldn’t find any that was adequate, so I wrote a robust, dependable one. The solution is part of my ServerToolkit project, which is a set of useful libraries that can be used to easily build scalable .NET servers. The solution is called BufferPool and is the first ServerToolkit sub-project.

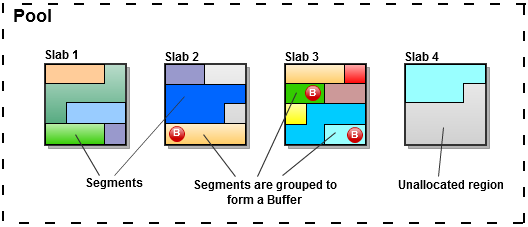

The idea is that you have a buffer pool: a collection of memory blocks (byte arrays), known as slabs, that you can grab buffers from. You decide the size of the slabs and the initial number of slabs to create.

As you request buffers from the pool, it internally allocates segments within the slabs for use by your buffer. If you need more buffers than are available within the pool, new slabs are created and are added to the pool. Conversely, as you dispose your buffers, slabs within the pool will be removed when they no longer contain any allocated segments.
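The grow-and-shrink behavior can be illustrated with a small, hypothetical pool. To keep it short, ToySlabPool hands out fixed-size segments only, whereas BufferPool's GetBuffer takes whatever size you request. It adds a slab when every existing slab is full and removes a slab once all of its segments have been returned. It keeps its last slab rather than emptying completely, which is a choice made for this sketch and not necessarily BufferPool's behavior.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical slab pool handing out fixed-size segments (illustration only).
public sealed class ToySlabPool
{
    // One slab: a single pre-allocated array plus the offsets not in use.
    private sealed class Slab
    {
        public readonly byte[] Memory;
        public readonly Stack<int> FreeOffsets = new Stack<int>();

        public Slab(int segmentSize, int segmentsPerSlab)
        {
            Memory = new byte[segmentSize * segmentsPerSlab];
            for (int i = segmentsPerSlab - 1; i >= 0; i--)
                FreeOffsets.Push(i * segmentSize);
        }
    }

    private readonly List<Slab> _slabs = new List<Slab>();
    private readonly int _segmentSize;
    private readonly int _segmentsPerSlab;
    private readonly object _sync = new object();

    public ToySlabPool(int segmentSize, int segmentsPerSlab, int initialSlabs)
    {
        _segmentSize = segmentSize;
        _segmentsPerSlab = segmentsPerSlab;
        for (int i = 0; i < initialSlabs; i++)
            _slabs.Add(new Slab(segmentSize, segmentsPerSlab));
    }

    public int SlabCount
    {
        get { lock (_sync) { return _slabs.Count; } }
    }

    public ArraySegment<byte> Rent()
    {
        lock (_sync)
        {
            // Use a free segment from an existing slab if there is one.
            foreach (Slab slab in _slabs)
            {
                if (slab.FreeOffsets.Count > 0)
                    return new ArraySegment<byte>(slab.Memory, slab.FreeOffsets.Pop(), _segmentSize);
            }

            // Every slab is full: grow the pool by one slab.
            Slab added = new Slab(_segmentSize, _segmentsPerSlab);
            _slabs.Add(added);
            return new ArraySegment<byte>(added.Memory, added.FreeOffsets.Pop(), _segmentSize);
        }
    }

    public void Return(ArraySegment<byte> segment)
    {
        lock (_sync)
        {
            Slab owner = _slabs.Find(s => s.Memory == segment.Array);
            if (owner == null)
                throw new ArgumentException("Segment does not belong to this pool.");

            owner.FreeOffsets.Push(segment.Offset);

            // Shrink: drop a slab once none of its segments are allocated,
            // but keep the last one so the pool never becomes empty.
            if (owner.FreeOffsets.Count == _segmentsPerSlab && _slabs.Count > 1)
                _slabs.Remove(owner);
        }
    }
}
```

For example, with two 512-byte segments per slab and one initial slab, a third Rent() call adds a second slab, and returning that third segment removes the second slab again.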

USING BUFFER POOLING IN YOUR APPLICATION

Download the code from http://github.com/tenor/ServerToolkit/tree/master/BufferPool

Compile it to a DLL and reference the DLL from your project

OR

Add the project to your solution and reference the ServerToolkit.BufferManagement project from your main project.

In the code file that performs socket operations, add:

```csharp
using ServerToolkit.BufferManagement;
```

Create a buffer pool when your application starts:

```csharp
BufferPool pool = new BufferPool(1 * 1024 * 1024, 1, 1);
```

This creates a pool that will have 1 MB slabs with one slab created initially. Subsequent slabs, if needed, will be created in increments of one.

It is advisable to create the pool as soon as your server application starts or at least before it begins any memory-intensive operations.

To use the pool in synchronous send and receive socket operations:

```csharp
/** SENDING DATA **/
// const int SEND_BUFFER_SIZE is the desired size of the send buffer in bytes.
// byte[] data contains the data to be sent.
using (var buffer = pool.GetBuffer(SEND_BUFFER_SIZE))
{
    buffer.FillWith(data);
    socket.Send(buffer.GetSegments());
}

/** RECEIVING DATA **/
// const int RECEIVE_BUFFER_SIZE is the desired size of the receive buffer in bytes.
// byte[] data is where the received data will be stored.
using (var buffer = pool.GetBuffer(RECEIVE_BUFFER_SIZE))
{
    // Receive returns the number of bytes actually read, which can be
    // less than the buffer size, so copy only those bytes.
    int bytesRead = socket.Receive(buffer.GetSegments());
    buffer.CopyTo(data, 0, bytesRead);
}
```

To use the pool for asynchronous send and receive socket operations:

Sending data:

```csharp
/** SENDING DATA ASYNCHRONOUSLY **/
// const int SEND_BUFFER_SIZE is the desired size of the send buffer in bytes.
// byte[] data contains the data to be sent.
var buffer = pool.GetBuffer(SEND_BUFFER_SIZE);
buffer.FillWith(data);
socket.BeginSend(buffer.GetSegments(), SocketFlags.None, SendCallback, buffer);

//...

// In the send callback:
private void SendCallback(IAsyncResult ar)
{
    var sendBuffer = (IBuffer)ar.AsyncState;
    try
    {
        socket.EndSend(ar);
    }
    catch (Exception ex)
    {
        // Handle exception here.
    }
    finally
    {
        if (sendBuffer != null)
        {
            sendBuffer.Dispose();
        }
    }
}
```

Receiving data:

```csharp
/** RECEIVING DATA ASYNCHRONOUSLY **/
// const int RECEIVE_BUFFER_SIZE is the desired size of the receive buffer in bytes.
// byte[] data is where the received data will be stored.
var buffer = pool.GetBuffer(RECEIVE_BUFFER_SIZE);
socket.BeginReceive(buffer.GetSegments(), SocketFlags.None, ReadCallback, buffer);

//...

// In the read callback:
private void ReadCallback(IAsyncResult ar)
{
    var recvBuffer = (IBuffer)ar.AsyncState;
    try
    {
        int bytesRead = socket.EndReceive(ar);
        if (bytesRead <= 0)
        {
            // The remote side closed the connection: stop reading.
            return;
        }

        byte[] data = new byte[bytesRead];
        recvBuffer.CopyTo(data, 0, bytesRead);
        // Do anything else you wish with the received data here.
    }
    catch (Exception ex)
    {
        // Handle the exception here, then stop reading from this socket
        // instead of issuing another receive on a failed socket.
        return;
    }
    finally
    {
        // Runs on every path, so the buffer always goes back to the pool.
        if (recvBuffer != null)
        {
            recvBuffer.Dispose();
        }
    }

    // Read/expect more data with a fresh buffer.
    var buffer = pool.GetBuffer(RECEIVE_BUFFER_SIZE);
    socket.BeginReceive(buffer.GetSegments(), SocketFlags.None, ReadCallback, buffer);
}
```

There is a performance penalty involved when using the code above. Each time you perform a socket operation, you create a new buffer, use it and then dispose it. These actions involve multiple locks on the shared pool, which can become a bottleneck.

There is an optimization that can help mitigate this issue. It is based on the way socket operations work. You can send data on a socket any time you like, but you typically receive data in a loop, i.e. receive data, signal that you are ready to receive more data, and repeat. This means that each socket works with only one receive buffer at a time.

With this in mind, you can assign each socket a receive buffer of its own. Each socket will use its receive buffer exclusively for all its receive operations, thereby avoiding the need to create and dispose a new buffer for every receive operation.

You have to dispose the receive buffer when you close the socket.
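The saving from giving each socket its own receive buffer can be sketched by counting trips to the shared pool. In this hypothetical sketch, CountingPool stands in for a shared pool (it simply allocates a new array and counts each locked Rent or Return call), and Connection keeps one receive buffer for its whole lifetime, replacing it only when a larger one is needed and returning it when disposed. Neither class is part of ServerToolkit.

```csharp
using System;

// Hypothetical stand-in for a shared buffer pool. To keep the sketch short it
// just allocates arrays; what matters is that every Rent/Return takes the lock.
public sealed class CountingPool
{
    private readonly object _sync = new object();
    private int _poolCalls;

    public int PoolCalls
    {
        get { lock (_sync) { return _poolCalls; } }
    }

    public byte[] Rent(int size)
    {
        lock (_sync) { _poolCalls++; return new byte[size]; }
    }

    public void Return(byte[] buffer)
    {
        lock (_sync) { _poolCalls++; }
    }
}

// Hypothetical per-socket state: one receive buffer, reused for every receive.
public sealed class Connection : IDisposable
{
    private readonly CountingPool _pool;
    private byte[] _receiveBuffer; // owned exclusively by this connection

    public Connection(CountingPool pool) { _pool = pool; }

    // Called before each receive. Touches the shared pool only when there is
    // no buffer yet or the current one is too small.
    public byte[] GetReceiveBuffer(int size)
    {
        if (_receiveBuffer == null || _receiveBuffer.Length < size)
        {
            if (_receiveBuffer != null) _pool.Return(_receiveBuffer);
            _receiveBuffer = _pool.Rent(size);
        }
        return _receiveBuffer;
    }

    // Call when the socket is closed; nothing else returns the buffer.
    public void Dispose()
    {
        if (_receiveBuffer != null)
        {
            _pool.Return(_receiveBuffer);
            _receiveBuffer = null;
        }
    }
}
```

For 100 receives, renting and disposing a buffer per operation would call into the pool 200 times; with a per-connection buffer, Rent and Return are each called once, so the pool's lock is taken twice.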

I recommend the pattern below:

```csharp
/** RECEIVING DATA ASYNCHRONOUSLY (OPTIMIZED) **/
// const int RECEIVE_BUFFER_SIZE is the desired size of the receive buffer in bytes.
// const int BUFFER_SIZE_DISPOSAL_THRESHOLD specifies a size limit which, if exceeded by a buffer,
// causes the buffer to be disposed immediately after a receive operation ends.
// Its purpose is to reduce the number of large buffers lingering in memory.
// IBuffer recvBuffer is the receive buffer associated with this socket.
// byte[] data is where the received data will be stored.
// object stateObject holds any state object that should be passed to the read callback.
// It is not necessary for this sample code.

if (recvBuffer == null || recvBuffer.IsDisposed)
{
    // Get a new receive buffer if one is not available.
    recvBuffer = pool.GetBuffer(RECEIVE_BUFFER_SIZE);
}
else if (recvBuffer.Size < RECEIVE_BUFFER_SIZE)
{
    // If the receive buffer is smaller than the desired size,
    // dispose it and acquire a new one that is large enough.
    recvBuffer.Dispose();
    recvBuffer = pool.GetBuffer(RECEIVE_BUFFER_SIZE);
}

socket.BeginReceive(recvBuffer.GetSegments(), SocketFlags.None, ReadCallback, stateObject);

//...

// In the read callback:
private void ReadCallback(IAsyncResult ar)
{
    int bytesRead = socket.EndReceive(ar);
    byte[] data = new byte[bytesRead];

    if (recvBuffer != null && !recvBuffer.IsDisposed)
    {
        if (bytesRead > 0)
        {
            recvBuffer.CopyTo(data, 0, bytesRead);
            // Do anything else you wish with the received data here.
        }

        // Dispose the buffer if it's larger than the specified threshold.
        if (recvBuffer.Size > BUFFER_SIZE_DISPOSAL_THRESHOLD)
        {
            recvBuffer.Dispose();
        }
    }

    // Zero bytes means the remote side closed the connection. This is where
    // you close the socket and dispose recvBuffer, since it won't be reused.
    if (bytesRead <= 0) return;

    // Read/expect more data.
    if (recvBuffer == null || recvBuffer.IsDisposed)
    {
        // Get a new receive buffer if one is not available.
        recvBuffer = pool.GetBuffer(RECEIVE_BUFFER_SIZE);
    }
    else if (recvBuffer.Size < RECEIVE_BUFFER_SIZE)
    {
        // If the receive buffer is smaller than the desired size,
        // dispose it and acquire a new one that is large enough.
        recvBuffer.Dispose();
        recvBuffer = pool.GetBuffer(RECEIVE_BUFFER_SIZE);
    }

    socket.BeginReceive(recvBuffer.GetSegments(), SocketFlags.None, ReadCallback, stateObject);
}
```

If you use the pattern above, remember to dispose the buffer when you close the socket.

You must dispose the buffer explicitly. Buffers do not define a finalizer, so once a buffer is created, it stays allocated until it is disposed.

CONCLUSION

The BufferPool project is the first sub-project of the ServerToolkit project. It is an efficient buffer manager for .NET socket operations.

It is written in C# 2.0 and targets .NET 2.0 for maximum backward compatibility.

BufferPool is designed with high performance and dependability in mind. Its goal is to abstract away the complexities of buffer pooling in a concurrent environment, so that the developer can focus on other issues and not worry about heap fragmentation caused by socket operations.